This was software project. The aim was to build software that simulates 3D abrasive processes in performant way, while making the code base easily extendable and maintainable. For these reasons, it was decided that we would use Python to program it.

Then the main challenge was presented - 1. Make the simulation fast - 2. Make the software design great

Achievement

What we achieved after we complete this software was: 1. Entire code base is designed in object-oriented principles using Python and C++ 2. 95% Test Coverages reached 3. CI/CD pipeline setup on Github Actions 4. Automatic Software versioning and releasing on Github 5. Runtime performance was 10x faster than the V0 of the code base

Now in the following section I will briefly introduce how this was achieved.

Problem Abstraction

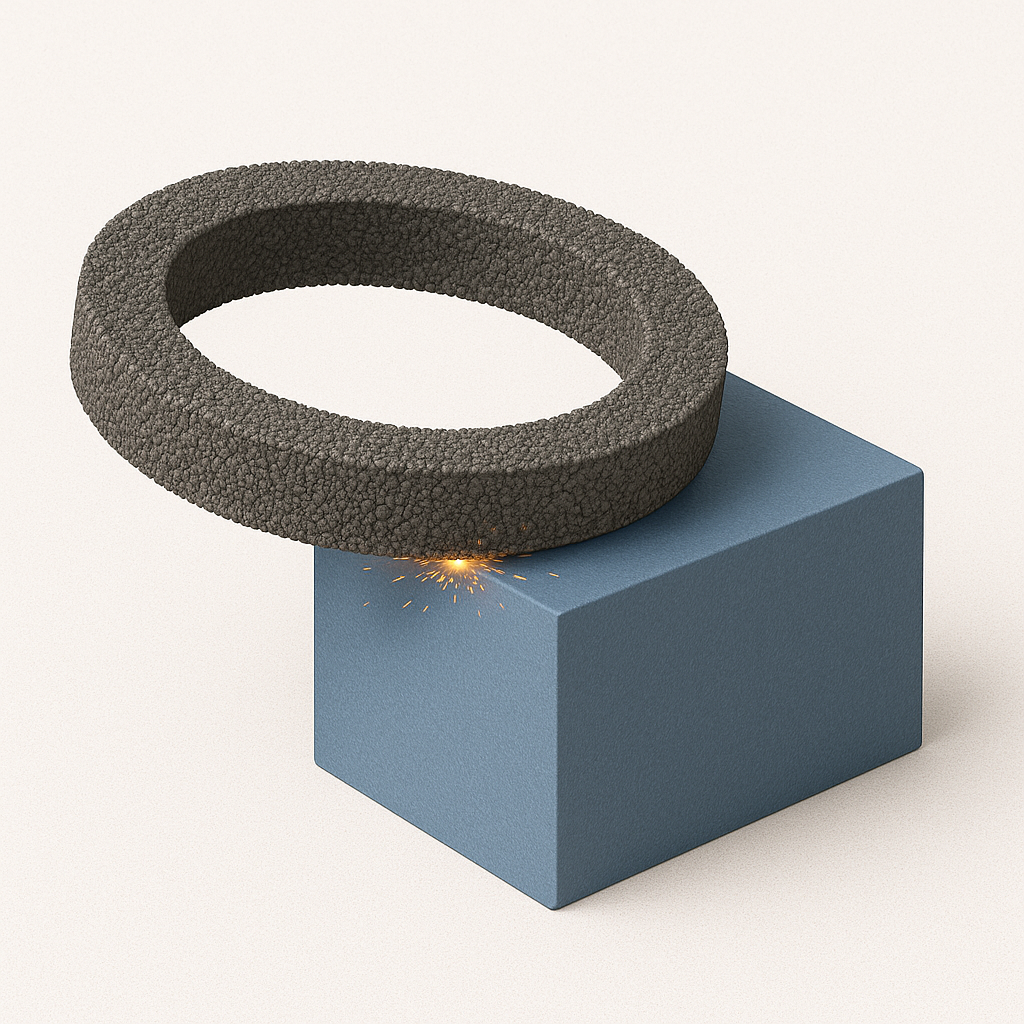

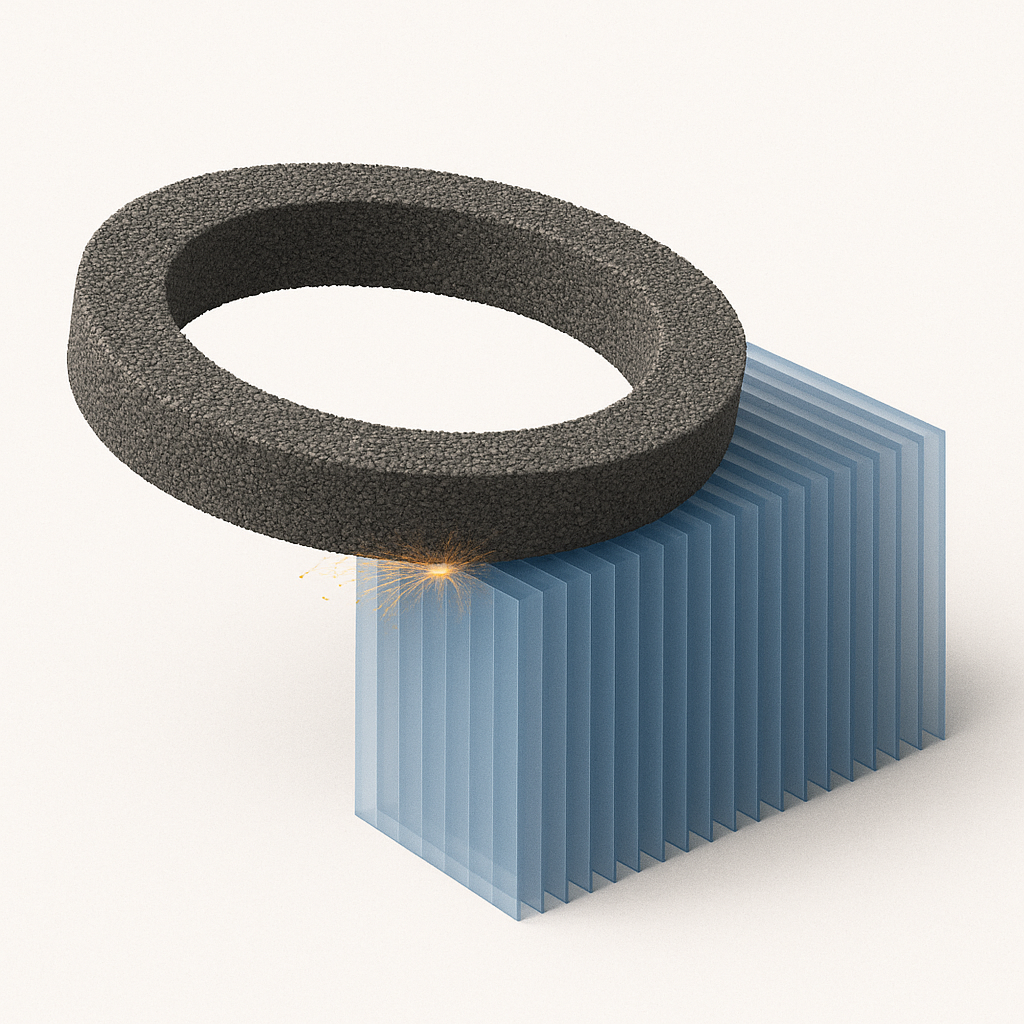

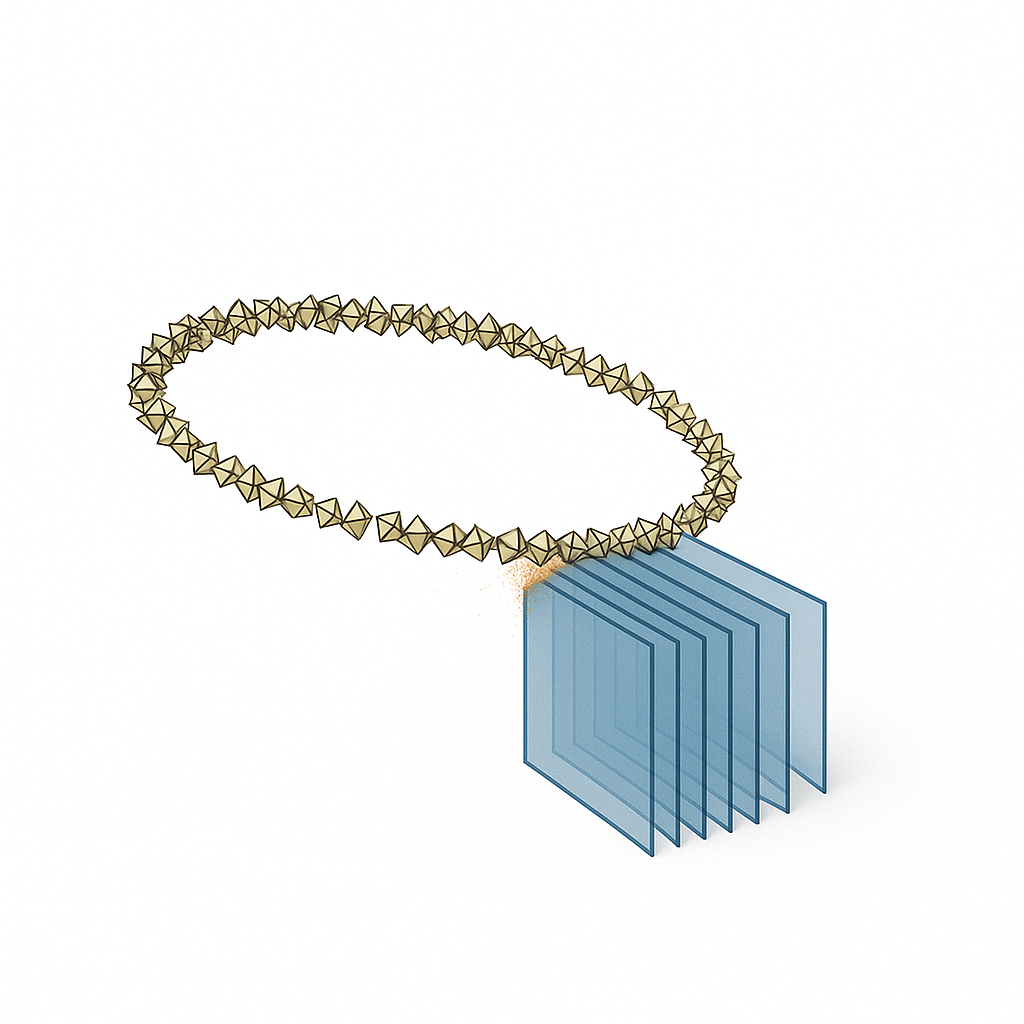

Surface grinding was abstracted in 3 steps as shown in the following graph.

However, doing geometric calculation of two 3D body is very costly, especially given the fact that in the real machining process, there will be 1 million interaction between grains and workpiece per second. To reasonably simulate this in any fashion required a simplification of the problem.

First we simplified the workpiece into collection of 2D planes.

Then we simplified the wheel as a collection of grains, which is of shape tetrahedron and octahedron

Through these simplifications, the problem is simplified from a two rigid body interactions to a 2.5 D simulation problem.

Software design pattern we used

The target of the software redesign was to make it: 1. Easily extendible: meaning very fast to add new features 2. Easily maintainable: debugging, maintaining the code should be fast and when core people leave the team, the knowledge should stay.

The measure of the above criteria is speed. If it's fast to refactor and debug. Then it means we have a good design.

To reach the above two targets, the most important things needed was: * Have a good test coverage * Have a modular design

How we achieved it is by applying the following points:

Architecture Overview

-

Layered Modular Architecture: Clean separation between core framework and the simulation tool box and the specific physical process these process can be applied one.

-

Domain-Driven Design: Clear separation between Geometry, PhysicalObjects, Simulation, and Visualization domains

-

Flexible Composition: Core simulation built around composable Tool, Workpiece, and Kinematics components

Key Design Patterns

Factory Pattern

This is the most fundamental pattern we used throughout the project. It enabled us to make the code base truly object-oriented.

class Shape:

@classmethod

def from_circle(cls, radius: float):

return cls(vertices)

@classmethod

def from_rectangle(cls, width: float, height: float):

return cls(vertices)

@classmethod

def from_file(cls, filepath: str):

return cls(parsed_data)

The benefit of this pattern is:

- Clean separation between "what kind of object" and "how to build it"

- Each factory method handles its own validation and geometric calculations

- Easy to add new construction methods without modifying existing code

Behavioral Patterns: Strategy Pattern via Configuration Classes

This is another pattern that we used widely in the code base.

class SortingStrategy:

algorithm: str # "quicksort", "mergesort", "heapsort"

threshold: int

parallel: bool

class DataProcessor:

def __init__(self, sorting_config: SortingStrategy):

self.config = sorting_config

def process(self, data):

if self.config.algorithm == "quicksort":

return self._quicksort(data)

elif self.config.algorithm == "mergesort":

return self._mergesort(data)

# Strategy selected at runtime

The benefits are:

- Users can optimize performance vs. accuracy trade-offs without code changes

- New algorithms can be added by extending configuration or providing new callables

- No need for complex inheritance hierarchies (leveraging Python's functional capabilities)

- Configuration classes document high-level strategies; callables handle low-level algorithms

- More Pythonic

Structural Patterns: Composition & Data Organization

We even coded our own library for visualization and plotting of 3D objects. For that we introduced the entire structure for plotting to visual elements, similar to the following:

class VisualElement:

"""Base component"""

pass

class Circle(VisualElement):

"""Leaf - single visual element"""

def __init__(self, x, y, radius):

self.x, self.y, self.radius = x, y, radius

class Line(VisualElement):

"""Leaf - single visual element"""

def __init__(self, start, end):

self.start, self.end = start, end

class Drawing(VisualElement):

"""Composite - container of visual elements"""

def __init__(self, elements: List[VisualElement]):

self.elements = elements

def add(self, element: VisualElement):

self.elements.append(element)

# Usage: treat individual elements and groups uniformly

drawing = Drawing([

Circle(0, 0, 5),

Circle(10, 10, 3),

Line((0, 0), (10, 10))

])

Dataclasses for Clean Data Structure

For storing the simulation results and make data analysis out of the box, we designed also the way how the simulation data is organised in the following way.

@dataclass

class SimulationResult:

"""Pure data container with automatic boilerplate"""

timestamp: float

position: Tuple[float, float, float]

velocity: Tuple[float, float, float]

energy: float

# Automatic __init__, __repr__, __eq__ provided

@dataclass

class AnalysisMetrics:

"""Container with lazy-computed properties"""

raw_data: List[float]

_mean: Optional[float] = None

@property

def mean(self):

if self._mean is None:

self._mean = np.mean(self.raw_data)

return self._mean

This enabled us to have:

- Clear data contracts between simulation components

- Automatic serialization/deserialization

- Self-documenting data structures

- Memory efficient (lazy properties only compute when accessed)

- Less boilerplate than traditional classes

All these examples given here is to show how we actually made a massive performance improvement possibile by having really great code design.